Channel bonding testing with iperf

This page collects recommendations for running network performance tests with iperf in a channel bonding scenario. We assume a setup with four bonded Broadcom 10Gbps NICs, but the recommendations should apply to similar setups.

First check the current configuration and transmit hash policy [1]:

    # cat /proc/net/bonding/bond0 | grep "Interface"
    Slave Interface: eno4
    Slave Interface: eno3
    Slave Interface: eno2
    Slave Interface: eno1

    # cat /proc/net/bonding/bond0 | head
    Ethernet Channel Bonding Driver: v3.7.1 (April 27, 2011)
    Bonding Mode: IEEE 802.3ad Dynamic link aggregation
    Transmit Hash Policy: layer3+4 (1)
    MII Status: up
    MII Polling Interval (ms): 100
    Up Delay (ms): 0
    Down Delay (ms): 0
    Peer Notification Delay (ms): 0
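The same check can be scripted when several hosts have to be compared. The following is a minimal sketch, assuming the status file has the layout shown above; the function name `bond_summary` is a hypothetical helper, not part of the bonding driver or of any package.

```shell
#!/bin/sh
# Print the bonding mode, transmit hash policy and slave list of a bond.
# bond_summary is a hypothetical helper name used only for illustration.
# Argument 1 (optional): path to the status file, default is bond0.
bond_summary() {
    status_file="${1:-/proc/net/bonding/bond0}"
    # Fields in the status file are "Key: value", so split on ": ".
    awk -F': ' '
        /^Bonding Mode:/         { print "mode=" $2 }
        /^Transmit Hash Policy:/ { print "xmit_hash_policy=" $2 }
        /^Slave Interface:/      { slaves = slaves (slaves ? "," : "") $2 }
        END                      { print "slaves=" slaves }
    ' "$status_file"
}
```

Calling `bond_summary` with no argument reads `/proc/net/bonding/bond0`; pass another path to inspect a different bond or a saved copy of the file.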

When performing the tests:

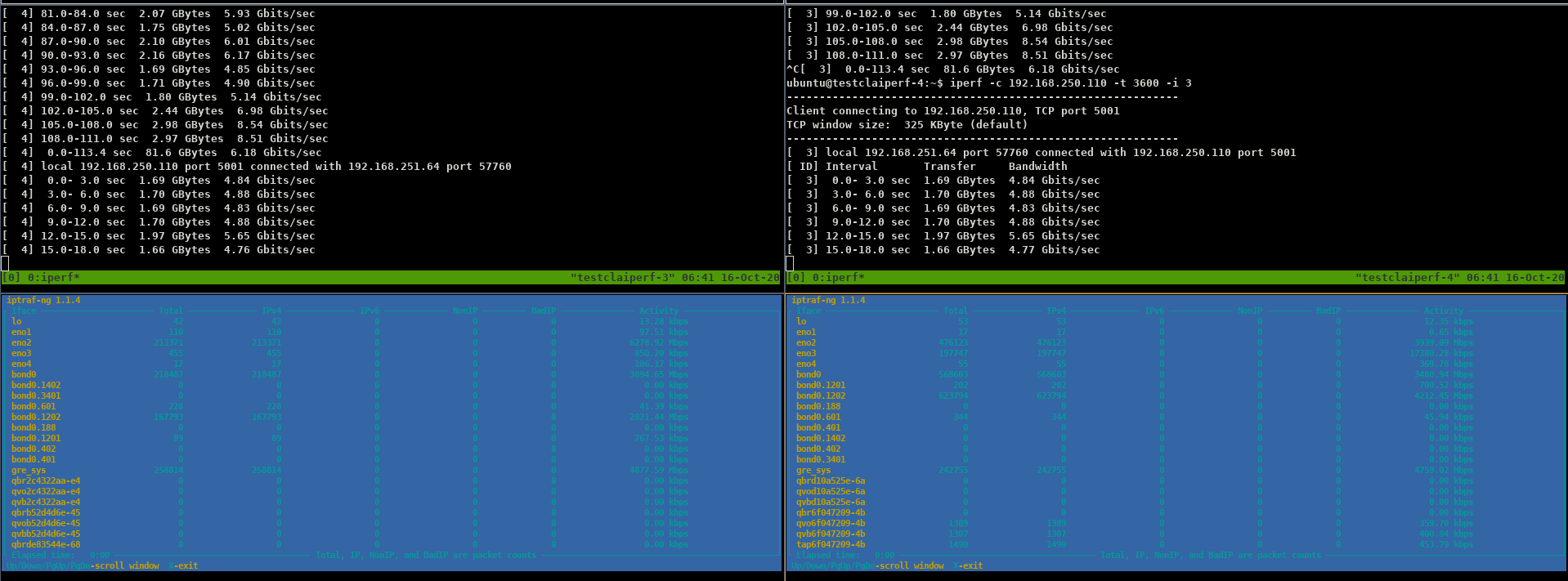

monitor the interfaces’ general statistics using iptraf-ng [2]

use iperf [3] on both sides

you probably don’t need iperf’s -P “parallel streams” option. Servers can usually generate 10Gbps on a single stream (and please note that iperf3 would use a single CPU [4]). It is better to start several iperf servers on different ports.

on the server side, for TCP you can use:

    iperf -s -i 3 -p 5002

on the client side, for TCP you can use:

    iperf -c <dest ip address> -i 3 -p 5002 -t 3600

on the server side, for UDP you can use:

    iperf -s -i 3 -u -p 5002

on the client side, for UDP you can use:

    iperf -c <dest ip address> -i 3 -p 5002 -t 3600 -u -b 12G

when testing UDP, the performance might be only a fraction of that obtained for TCP. This may be due to missing or untriggered hardware acceleration on the NIC

you will probably notice that different streams sometimes use the same physical interface in the bond. This may happen because the configured transmit hash policy computes the same hash for both streams. Depending on the configured transmit hash policy, stopping and restarting the iperf client changes the source port and can select a different physical interface

the Linux transmit hash policy algorithm may differ from the algorithm used by your switch. Check the hash policy documentation [1] and use the source (Luke) [5]

take into account that in OpenStack the VM traffic is encapsulated in tunneling protocols (e.g. GRE, VXLAN), so the transmit hash policy algorithm is applied to the outer tunnel headers

try to use VMs on different hosts to see if different physical interfaces are employed

you probably don’t need NUMA based optimizations [6] [7] [8] [9] if you have 10Gbps NICs
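To see why two streams can land on the same physical interface, and why a new source port can move a stream, the layer3+4 selection can be reproduced by hand. The sketch below assumes the formula given for layer3+4 in older Linux bonding documentation, ((source port XOR dest port) XOR ((source IP XOR dest IP) AND 0xffff)) modulo slave count. Newer kernels compute the hash differently (see the source in [5]) and your switch may use yet another algorithm, so treat the result as an illustration rather than a prediction. The function names `ip_to_int` and `l34_slave_index` are hypothetical helpers, and the addresses and ports are made up.

```shell
#!/bin/sh
# Sketch of the layer3+4 formula from older Linux bonding documentation.
# ASSUMPTION: current kernels and switches may hash differently.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    ip_old_ifs=$IFS
    IFS=.
    set -- $1          # split the address into its four octets
    IFS=$ip_old_ifs
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Args: src_ip dst_ip src_port dst_port slave_count
# Prints the index (0-based) of the slave the formula would select.
l34_slave_index() {
    l34_src=$(ip_to_int "$1")
    l34_dst=$(ip_to_int "$2")
    echo $(( (($3 ^ $4) ^ (($l34_src ^ $l34_dst) & 0xffff)) % $5 ))
}

# Example calls (hypothetical addresses, iperf server on port 5002, 4 slaves):
#   l34_slave_index 10.0.0.1 10.0.0.2 40000 5002 4   -> 1
#   l34_slave_index 10.0.0.1 10.0.0.2 40004 5002 4   -> 1  (same slave)
#   l34_slave_index 10.0.0.1 10.0.0.2 40001 5002 4   -> 0  (different slave)
```

With four slaves, source ports 40000 and 40004 both map to index 1 while 40001 maps to index 0. This mirrors the behaviour described above: a restarted client picks a new ephemeral port, which may or may not change the interface. For encapsulated VM traffic, the inputs would be the outer tunnel addresses and ports.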

| [1] | https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/7/html/networking_guide/sec-using_channel_bonding |

| [2] | https://github.com/iptraf-ng/iptraf-ng |

| [3] | https://iperf.fr/iperf-doc.php |

| [4] | https://fasterdata.es.net/performance-testing/network-troubleshooting-tools/iperf/multi-stream-iperf3/ |

| [5] | https://github.com/torvalds/linux/tree/master/drivers/net/bonding https://elixir.bootlin.com/linux/latest/source/drivers/net/bonding/bond_main.c#L3429 |

| [6] | https://dropbox.tech/infrastructure/optimizing-web-servers-for-high-throughput-and-low-latency |

| [7] | https://h50146.www5.hpe.com/products/software/oe/linux/mainstream/support/whitepaper/pdfs/4AA4-9294ENW-2013.pdf |

| [8] | https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/5/html/virtualization/ch33s08 |

| [9] | https://fasterdata.es.net/host-tuning/linux/100g-tuning/ |